Make First Function Wait for Second Function to Execute Before Running Again Python

Stop Waiting! Start using Async and Await!

Introduction

The most important ingredient for us data scientists is data. How do we get the data we need into our programs? We do that through I/O operations like querying a database, loading files from disk, or downloading data from the web through HTTP requests. These I/O operations can take quite some time, during which we just hang around waiting for the data to be accessible. This gets even worse when we have to load multiple files, run multiple database queries, or perform multiple HTTP requests. Most often, we perform these operations sequentially, so executing 100 I/O operations takes roughly 100 times longer than executing a single one. Now waiting is not only annoying but becomes a real pain.

But wait, not too long :), does it make sense to wait for the response to a request before we fire another, completely independent request? Or, as a daily-life example, when you write an email to two persons, would you wait to send the email to the second person until you've received a response from the first one? I guess not. In this article, I want to show you how to significantly reduce waiting time for IO-bound problems using the Asynchronous IO, AsyncIO for short, programming paradigm in Python. I will not dive too much into technical details, but rather keep it fairly basic and show you a small code example, which hopefully facilitates your understanding.

The Problem

Assume we want to download three different files from a server. If we do that sequentially, most of the time our CPU is idle, waiting for the server to respond. If the response time dominates, the total execution time is the sum of the individual response times. Schematically, this looks like what is shown in Picture 1.

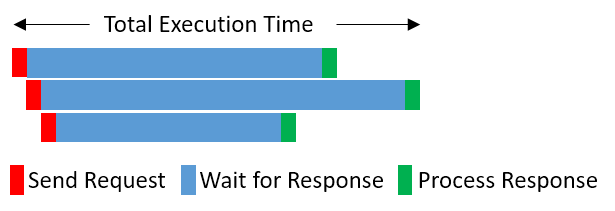

I think an idle CPU sounds suboptimal. Wouldn't it be better to send the requests sequentially, be idle until all those requests have finished, and combine all the responses? In that scenario, again assuming the response time dominates, the total execution time is roughly given as the maximum response time out of all requests. Schematically, this is shown in Picture 2.

Note that the blue bar just visualizes the time between a request being sent and the response being available. Instead of just having an idle CPU in that timeframe, we should make use of it and perform some work. That's exactly what we are doing here. When a response from one request arrives, we already process it while still waiting for the other responses to arrive. That sounds great, doesn't it? So how can we write code that makes use of that? As already spoiled in the introduction, we can use AsyncIO to that end.
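
To make the sum-versus-maximum argument a bit more tangible, here is a minimal sketch that uses only the standard library. The three response times and the names fake_request, one_after_another, and all_at_once are made up for illustration, and asyncio.sleep merely stands in for waiting on a server, so treat the numbers as a toy model rather than a benchmark.

```python
import asyncio
import time

# Hypothetical response times in seconds, standing in for three downloads.
RESPONSE_TIMES = [0.3, 0.2, 0.1]


async def fake_request(response_time: float) -> float:
    # asyncio.sleep stands in for waiting on the server.
    await asyncio.sleep(response_time)
    return response_time


async def one_after_another() -> float:
    # Each request is only sent after the previous response has arrived.
    start = time.perf_counter()
    for response_time in RESPONSE_TIMES:
        await fake_request(response_time)
    return time.perf_counter() - start


async def all_at_once() -> float:
    # All requests are in flight together; we wait for the slowest one.
    start = time.perf_counter()
    await asyncio.gather(*(fake_request(t) for t in RESPONSE_TIMES))
    return time.perf_counter() - start


sequential_time = asyncio.run(one_after_another())
concurrent_time = asyncio.run(all_at_once())
print(f"sequential: {sequential_time:.2f}s (sum of waits is {sum(RESPONSE_TIMES):.1f}s)")
print(f"concurrent: {concurrent_time:.2f}s (longest wait is {max(RESPONSE_TIMES):.1f}s)")
```

On an otherwise idle machine, the first number should land close to the sum of the waits and the second close to the longest single wait.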

The Solution

Asynchronous programming is a higher-level programming paradigm, where you start a task, and while you don't have the result of that task, you do some other work. With this, AsyncIO gives a feeling of concurrency despite using a single thread in a single process. The magic ingredients to make that work are an event loop, coroutines, and awaitable objects, which are coroutine objects, Tasks, and Futures. Just very briefly:

- An event loop orchestrates the execution and communication of awaitable objects. Without an event loop, we cannot use awaitables; hence, every AsyncIO program has at least one event loop.

- A native coroutine is a Python function defined with async def. You can think of it as a pausable function that can hold state and resume execution from the paused state. You pause a coroutine by calling await on an awaitable. By pausing, it releases the flow of control back to the event loop, which enables other work to be done. When the result of the awaitable is ready, the event loop gives the flow of control back to the coroutine. Calling a coroutine function returns a coroutine object that must be awaited to get the actual result. Finally, it's important to remember that you can call await only inside a coroutine. Your root coroutine needs to be scheduled on the event loop by a blocking call.

Below you find some very basic code that hopefully facilitates your understanding:

    import asyncio


    async def sample_coroutine():
        return 1212


    async def main_coroutine():
        coroutine_object = sample_coroutine()
        # With await, we stop execution, give control back to the
        # event loop, and come back when the result of the
        # coroutine_object is available.
        result = await coroutine_object
        assert result == 1212


    # Blocking call to get the event loop, schedule a task, and close
    # the event loop
    asyncio.run(main_coroutine())
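
The pausing and resuming described above becomes visible once two coroutines share the event loop. The following is a small sketch, not part of the download example: the names worker, events, slow, and fast are hypothetical, and asyncio.create_task (available in Python 3.7+) is used to wrap each coroutine object in a Task so that both are scheduled before we await either of them.

```python
import asyncio
from typing import List

events: List[str] = []


async def worker(name: str, delay: float) -> str:
    events.append(f"{name} start")
    # Pause here: control goes back to the event loop, which can run
    # the other task until this sleep is over.
    await asyncio.sleep(delay)
    events.append(f"{name} end")
    return name


async def main() -> List[str]:
    # create_task schedules both coroutines on the running event loop.
    slow = asyncio.create_task(worker("slow", 0.2))
    fast = asyncio.create_task(worker("fast", 0.1))
    # Awaiting a Task pauses main until that Task has a result.
    return [await slow, await fast]


results = asyncio.run(main())
print(events)   # ['slow start', 'fast start', 'fast end', 'slow end']
print(results)  # ['slow', 'fast']
```

Both workers start before either one finishes, which is the single-threaded "feeling of concurrency" mentioned earlier.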

If you are interested in more comprehensive introductions, I refer you to this, this, and that.

The Example

Enough theory, let's try it out. The prerequisite for using native coroutines is Python 3.5+; however, asyncio is evolving rapidly, so I would suggest using the most recent Python version, which was 3.7+ at the time of writing. As an example, I download a couple of dog images. For comparison, I do the same operations in both synchronous and asynchronous fashion. To execute HTTP requests, I use Requests for the synchronous part and AIOHTTP for the async part. I have intentionally left out any kind of error checking to avoid convoluting the code.

The Code

So let's first install the necessary modules

    pip install aiohttp requests

Next, I import the modules and add a small helper function

    import asyncio
    import time
    from typing import Any, Iterable, List, Tuple, Callable
    import os

    import aiohttp
    import requests


    def image_name_from_url(url: str) -> str:
        return url.split("/")[-1]

Now, given a bunch of image URLs, we can sequentially download and store them as a List of pairs (image name, byte array) by

    def download_all(urls: Iterable[str]) -> List[Tuple[str, bytes]]:
        def download(url: str) -> Tuple[str, bytes]:
            print(f"Start downloading {url}")
            with requests.Session() as s:
                resp = s.get(url)
                out = image_name_from_url(url), resp.content
            print(f"Done downloading {url}")
            return out

        return [download(url) for url in urls]

I have added a few print statements so that you can see what happens when executing the function. So far so good, nothing new up until now, but here comes the async version

    async def donwload_aio(urls: Iterable[str]) -> List[Tuple[str, bytes]]:
        async def download(url: str) -> Tuple[str, bytes]:
            print(f"Start downloading {url}")
            async with aiohttp.ClientSession() as s:
                resp = await s.get(url)
                out = image_name_from_url(url), await resp.read()
            print(f"Done downloading {url}")
            return out

        return await asyncio.gather(*[download(url) for url in urls])

Ah ha, this looks nearly identical, apart from all those async and await keywords. Let me explain to you what's happening here.

- donwload_aio is a coroutine as it is defined with async def. It has to be a coroutine because we call other coroutines within it.

- In the download coroutine, we create a Session object using an async context manager (async with) and await the result of the get request. At this point, we perform the potentially long-lasting HTTP request. Through await we pause execution and give other tasks the chance to work.

- asyncio.gather is probably the most important part here. It executes a sequence of awaitable objects and returns a list of the gathered results. With this function, you can achieve a feeling of concurrency as shown in Picture 2. You cannot schedule too many coroutines with gather; it's on the order of a few hundred. When you run into issues with that, you can still partition the calls into smaller chunks that you pass to gather one by one. Like calling a coroutine returning an awaitable, calling gather also returns an awaitable that you have to await to get the results.
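
The chunking idea from the last bullet could be sketched roughly as follows. This is only one possible way to do it: gather_in_chunks, fake_download, and chunk_size are hypothetical names that do not appear in the download code, and asyncio.sleep again stands in for a real HTTP request so that the sketch runs without network access.

```python
import asyncio
from typing import Awaitable, Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


async def gather_in_chunks(
    items: Sequence[T],
    worker: Callable[[T], Awaitable[R]],
    chunk_size: int,
) -> List[R]:
    """Run worker on all items, but only chunk_size of them at a time."""
    results: List[R] = []
    for start in range(0, len(items), chunk_size):
        chunk = items[start:start + chunk_size]
        # Each chunk is gathered on its own; the next chunk only starts
        # once every awaitable of the current one has finished.
        results.extend(await asyncio.gather(*(worker(item) for item in chunk)))
    return results


async def fake_download(n: int) -> int:
    # Stand-in for an HTTP request (assumption for this sketch).
    await asyncio.sleep(0.01)
    return n * 2


chunked = asyncio.run(gather_in_chunks(list(range(10)), fake_download, chunk_size=4))
print(chunked)  # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
```

The trade-off of this simple variant is that a single slow item holds back the start of the next chunk, so the chunk size is something to tune for your server and workload.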

Let's put that together, run it, and compare how long it takes to download the doggies.

The Result

Below you find the code to perform both the synchronous and asynchronous HTTP calls.

    if __name__ == "__main__":
        # Get list of images from dogs API
        URL = "https://dog.ceo/api/breed/hound/images"
        images = requests.get(URL).json()["message"]

        # Take only 200 images to not run into issues with gather
        reduced = images[:200]

        st = time.time()
        images_s = download_all(reduced)
        print(f"Synchronous exec took {time.time() - st} seconds")

        st = time.time()
        images_a = asyncio.run(donwload_aio(reduced))
        print(f"Asynchronous exec took {time.time() - st} seconds")

In a slightly simplified form, the synchronous version prints out

Start 1, End 1, Start 2, End 2, … , Start 200, End 200

which reflects the flow shown in Picture 1. The async counterpart prints out something like

Start 1, Start 2, …, Start 200, End 3, End 1, …, End 199

which reflects what is shown in Picture 2. Awesome, that is what I have promised you. But I think that alone is not very convincing. So, save the best for last and let's look at the execution times. To download 200 images on my machine, the synchronous call took 52.7 seconds, while the async one took 6.5 seconds, which is about 8 times faster. I like that! The speedup varies based on the maximum download time of an individual item, which depends on the item's size and the load the server can handle without slowing down.
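
One detail worth knowing about the shuffled "End" messages: although the downloads finish in a different order than they were started, asyncio.gather returns its results in the order of the awaitables passed in. The sketch below illustrates this with simulated delays; fake_download, completion_order, and the delay values are assumptions made for the illustration and are not part of the download code.

```python
import asyncio
from typing import List

completion_order: List[int] = []


async def fake_download(index: int, delay: float) -> int:
    # asyncio.sleep stands in for the time a real download would take.
    await asyncio.sleep(delay)
    completion_order.append(index)
    return index


async def main() -> List[int]:
    # Item 1 is the slowest, item 3 the fastest.
    delays = [0.3, 0.2, 0.1]
    return await asyncio.gather(
        *(fake_download(i, d) for i, d in enumerate(delays, start=1))
    )


gathered = asyncio.run(main())
print("completed:", completion_order)  # completed: [3, 2, 1]
print("gathered: ", gathered)          # gathered:  [1, 2, 3]
```

So images_a lines up with the list of URLs just like images_s does, even though the print statements interleave.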

Take-Aways

- Use AsyncIO for IO-bound problems that you want to accelerate. There are tons of modules for IO-bound operations like Aioredis, Aiokafka, or Aiomysql, just to mention a few. For a comprehensive list of higher-level async APIs, visit awesome-asyncio.

- You can only await a coroutine inside a coroutine.

- You need to schedule your async program or the "root" coroutine by calling asyncio.run in Python 3.7+ or asyncio.get_event_loop().run_until_complete in Python 3.5–3.6.

- Last but most important: Don't wait, await!
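
As a rough illustration of the two scheduling styles from the take-aways, the sketch below runs the same hypothetical root coroutine both ways. One deliberate substitution, which is an assumption of this sketch: instead of asyncio.get_event_loop(), the loop is created explicitly with asyncio.new_event_loop(), because relying on get_event_loop() to create a loop implicitly is deprecated in recent Python versions.

```python
import asyncio


async def root() -> str:
    # Hypothetical root coroutine; a real program would await its work here.
    await asyncio.sleep(0)
    return "done"


# Python 3.7+: asyncio.run creates a loop, runs the coroutine, closes the loop.
via_run = asyncio.run(root())

# Older, more manual style: manage the event loop yourself.
loop = asyncio.new_event_loop()
try:
    via_loop = loop.run_until_complete(root())
finally:
    loop.close()

print(via_run, via_loop)  # done done
```

Unless you have to support Python 3.5 or 3.6, asyncio.run is the simpler choice.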

Hopefully, you've learned something new and can reduce waiting time. Thank you for following along and feel free to contact me for questions, comments, or suggestions.

Source: https://towardsdatascience.com/stop-waiting-start-using-async-and-await-18fcd1c28fd0
